In 2016, Casetext, the legal research startup, introduced CARA, a tool that uses artificial intelligence to analyze users’ uploaded briefs and memoranda and find relevant cases the document missed. Lawyers could use it to vet an opponent’s brief for omitted cases or to double-check their own research before submitting the document.

CARA won recognition as new product of the year from the American Association of Law Libraries and inspired other legal research companies to develop brief-analysis products of their own, including EVA from ROSS Intelligence, Clerk from Judicata and Vincent from vLex.

Today, Thomson Reuters enters the fray with the launch of Quick Check, included beginning July 24 at no additional cost with all subscriptions to its legal research platform Westlaw Edge. (It is not available as a stand-alone product.)

Like CARA, Quick Check enables a lawyer to upload any document that contains at least two citations and obtain a list of other relevant authorities that the document does not cite. It also warns if any cases the document does cite may not be good law.

During a preview briefing for the media Tuesday, TR executives said that Quick Check differs from other brief-analysis products in that it delivers a limited set of results containing only the most highly relevant citations, in order to make it more efficient for lawyers to use.

“To upload a motion or brief to a system and bring back a search result is an easy thing to do,” said Mike Dahn, senior vice president of Westlaw product management, in an apparent allusion to competitors’ products. “We’ve gone beyond the simple way to approach this problem to focus on bringing back items that might have been missed, but also to make it efficient.”

Dahn said that Quick Check had been in development since 2016 and was slated to be included in Westlaw Edge when it launched last July. But in TR’s early testing of the product with customers, it found that they liked the concept but not the delivery: the tool was returning far too many results, often including cases they had already found in their own research.

Dahn and product manager Carol Jo Lechtenberg decided that Quick Check needed to be refined to deliver only a limited set of the most highly relevant results. To achieve this, they turned to the computer scientists at TR’s AI research arm, the Center for AI & Cognitive Computing.

The product they developed, according to the center’s Tonya Custis, senior director of research, “is by far the most ambitious AI feature we’ve launched to date.”

Custis further sought to distinguish this from other brief-check products, saying that it draws on three strengths unique to TR: the company’s editorial expertise in legal research, its engineering expertise in natural language processing and machine learning, and its comprehensive database of primary and secondary legal materials.

Using Quick Check

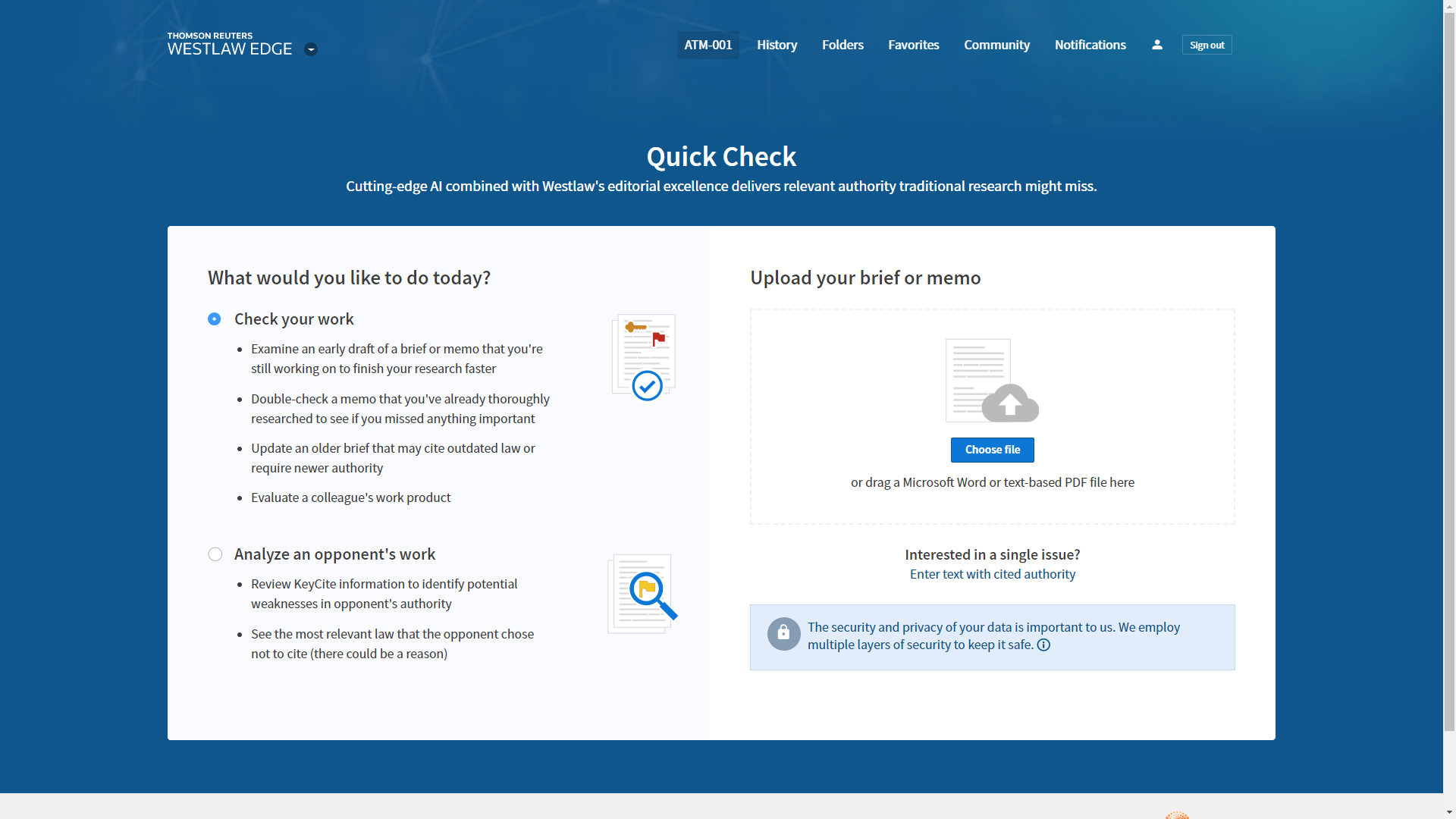

To use Quick Check, you first click a radio button to indicate whether you wish to check your work or your opponent’s. You then upload a Microsoft Word document or a text-recognized PDF. After about a minute, during which Quick Check extracts the brief’s text and analyzes its language and citation relationships, it returns a list of results showing authorities that are highly relevant to the issues but not cited in the document.

(For security, TR says, it immediately encrypts the uploaded document and then, once the analysis is completed, deletes it from the system.)

Rather than return all relevant results, Quick Check delivers only a limited list of what it determines to be the most highly relevant items. Its suggestions are organized under headings that mirror the section headings in your brief. Although already short, the list of results can be further filtered by document type, jurisdiction, date, and other criteria.

“We really wanted to make it efficient,” said Lechtenberg. “That’s why we call it quickcheck. Customers did not want to spend a ton of additional time reviewing results.”

In displaying the recommended citations, Quick Check includes features designed to further enhance the efficiency of the process. One is to highlight the outcome (or holding) of a case with respect to the particular issue. (The outcome may not be shown for certain cases, such as unreported cases.)

For each recommendation, Quick Check also displays a snippet of text that gives the relevant context within the case, along with the cases in the uploaded document to which the recommendation relates. In addition, certain recommendations bear one of three flags to help the user further evaluate their helpfulness: “frequently cited,” “high court,” or “last two years.”

An eyeglass icon next to a recommended case tells the user it is one the user had previously reviewed or added to a folder in the course of the user’s research.

A separate tab relates to the citations that are contained in the brief. It provides warnings that citations may not be good law, based on their negative treatment in the KeyCite citation service. This strikes me as redundant, in that the lawyer should already have checked any cases used in a brief. But redundancy can sometimes be a good thing.

A final tab provides a complete table of authorities for all the additional citations that Quick Check recommends. This is simply for ease of use, allowing the researcher to download or print all the citations.

When you use this feature to check an opponent’s brief, Quick Check displays a different set of results. The first tab, labeled Potential Weakness, shows any authorities your opponent cited that have been criticized by other courts. A second tab, labeled Omitted Authority, shows cases that appear to be relevant to your opponent’s argument, but that your opponent did not cite, possibly because they were negative to its argument.

When to Use Quick Check

Thomson Reuters envisions that attorneys will use this tool in four ways:

- Update an old brief or memo to identify new authorities or subsequent treatments.

- Analyze opponent’s work to discover weaknesses in their cited authority.

- Analyze an early draft to get a jump start on research.

- Perform a final check on work to make sure no important authority was missed.

Further making the case for how Quick Check differs from competitors’ products, Lechtenberg offered what she sees as its seven unique advantages:

- The algorithm is powered by TR’s proprietary Key Numbers to help find more precise results.

- The user is able to exclude or highlight results that were already viewed in prior research.

- KeyCite warnings are integrated.

- The data that drives it includes thousands of proprietary treatises.

- Massive user data will drive a superior machine-learning algorithm.

- Results are organized by issue for context.

- It is included at no cost in Westlaw Edge.

Developing Quick Check was “one of the toughest things we’ve ever tried to do,” Dahn told reporters Tuesday. But he believes that customers will be quite happy with the resulting product. Not only does it go beyond traditional research methods to bring back highly relevant items, he said, but it also does it in a manner that is highly efficient so researchers don’t spend a lot of time sifting through results.

Further Thoughts

While several companies now offer brief-check products, each varies in its focus and capabilities. Last year, after ROSS unveiled EVA, Casetext staged a “robot fight” face-off comparing EVA to its CARA. No one from ROSS participated, so the fight was not exactly fair, but Casetext made its point that these products differ in what they do and the results they deliver.

(Casetext plans to stage another such robot battle this week at the annual conference of the American Association of Law Libraries.)

One difference between TR’s Quick Check and CARA is that CARA works with any type of litigation document, even if it contains no citations. Quick Check requires that the document contain at least two citations.

This is not a huge deal. But, as I wrote here last year about CARA, it can be useful to be able to upload a document such as a complaint and generate results, as it provides a way to kickstart research.

TR’s approach of limiting results is interesting, but I wonder if it is a solution in search of a problem. I agree with excluding results already cited in the document and with flagging results that were previously viewed. However, I am not sure there is a need to limit the remaining results to just a dozen or so citations, and I am not sure researchers will always want such a limit.

Apparently, Quick Check gets around this by including a “see more like this” link in the results. So, while the initial list is limited, a researcher who wants to see more can do so.

I’d like to see something akin to a slider bar that allows the researcher to expand or contract the list of results.

Those comments aside, and without having directly tested Quick Check, it appears that TR has developed a strong contender in a field of competing products. Given the foundation of TR’s domain expertise and comprehensive data, and the reputation of TR’s AI center, I have little doubt that this will deliver on its promise of focused, relevant results.

Whether researchers use this as a jump start or a fail-safe, subscribers to Westlaw Edge will benefit from access to this feature. Is it reason for non-subscribers to sign up? Given the other products on the market, the answer to that will depend on the totality of the individual’s research needs, not to mention the person’s budget.

Robert Ambrogi Blog
