Casetext today is rolling out three major updates to its legal research platform. One is an updated design and architecture to make the site faster, cleaner and easier to use. But it is the other two updates that I want to focus on, because, in combination, they dramatically enhance the results you receive when performing legal research.

Both updates involve Casetext’s artificial-intelligence, brief-analysis software CARA (Case Analysis Research Assistant):

- First, CARA now works not only with briefs, but with any type of litigation document — and possibly even non-litigation legal documents, based on my testing.

- Second, CARA is now integrated into Casetext’s standard legal research workflow, so that it can be used to enhance keyword queries and deliver results that are far better matched to the facts and issues at hand.

When Casetext introduced CARA in 2016, it was the first product of its kind on the market. When you uploaded a brief or memorandum into CARA, it would analyze it and generate a list of cases that are relevant to the issues discussed in the document but not mentioned in it. It was a powerful tool to vet an opponent’s brief or proof documents of your own. The American Association of Law Libraries named it new product of the year in 2017 and it spawned a new generation of similar brief-analysis programs.

But for CARA to work, the uploaded document needed to contain case citations. That was because its algorithm compared the cases in the uploaded document to the cases and articles in the Casetext database, looking for other cases that were usually cited alongside those cases.
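To make the co-citation idea concrete, here is a minimal sketch in Python. It is an illustration only, not Casetext’s actual algorithm; the function name `suggest_cases`, the overlap-based scoring, and the sample data are all hypothetical.

```python
from collections import Counter


def suggest_cases(brief_citations, citing_documents, top_n=3):
    """Rank cases NOT cited in the brief by how often other documents
    cite them alongside cases the brief does cite (toy co-citation count)."""
    cited = set(brief_citations)
    counts = Counter()
    for doc_citations in citing_documents:
        doc_set = set(doc_citations)
        overlap = len(doc_set & cited)  # how many of the brief's cases this document cites
        if overlap == 0:
            continue  # document shares nothing with the brief
        for case in doc_set - cited:
            counts[case] += overlap  # weight by strength of the overlap
    return [case for case, _ in counts.most_common(top_n)]


# Hypothetical corpus: each inner list is the set of cases one document cites.
corpus = [
    ["Case A", "Case B", "Case C"],
    ["Case A", "Case C", "Case D"],
    ["Case B", "Case C"],
    ["Case E", "Case F"],
]

suggestions = suggest_cases(["Case A", "Case B"], corpus)
print(suggestions)
```

The sketch also shows why citations were required: if the uploaded document cites nothing, there is no overlap to count and the function returns an empty list.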

With today’s update, CARA works with any kind of legal document, regardless of whether it contains citations. You can, for example, upload a complaint that contains no citations and use CARA to find cases relevant to the facts and issues.

That would be good news in itself. But even more notable, in my opinion, is the integration of CARA within the standard legal research workflow. I got to try it over the weekend in advance of today’s release, and I found that I almost always obtained search results that were directly on point to my facts and issues, and did so without having to construct elaborate queries.

Here is how it works.

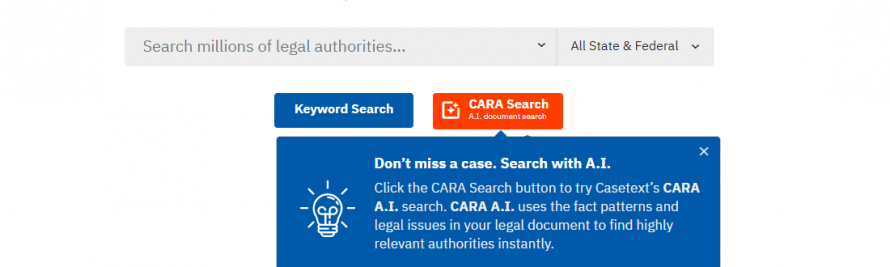

Now when you go to perform a research query in Casetext, the standard search bar has two buttons under it, one labeled Keyword Search and one labeled CARA Search. If you select the latter, you are prompted to upload a legal document.

Once you do, CARA analyzes the document and then contextualizes your query to the facts and legal arguments the document contains. As a result, even a generic query can deliver on-point results.
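Casetext does not describe how this contextualization works, so the following Python sketch is only a toy illustration of the general idea: keep the results that match the keyword query, then order them by how much vocabulary they share with the uploaded document. The `rerank` function, the word-overlap scoring, and the sample texts are all assumptions made for illustration.

```python
import re
from collections import Counter


def tokens(text):
    """Lowercase words longer than three letters (a crude stop-word filter)."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3]


def rerank(query, context_document, candidates):
    """Keep candidates containing every query term, ordered by how much
    vocabulary they share with the uploaded context document."""
    query_terms = set(tokens(query))
    context_terms = Counter(tokens(context_document))
    scored = []
    for title, text in candidates:
        words = set(tokens(text))
        if not query_terms <= words:
            continue  # plain keyword filter: every query term must appear
        score = sum(context_terms[w] for w in words)  # overlap with the upload
        scored.append((score, title))
    scored.sort(key=lambda pair: -pair[0])  # highest overlap first
    return [title for _, title in scored]


# Hypothetical stand-ins for an uploaded complaint and three opinions.
complaint = ("Plaintiff publishes state regulations. "
             "Defendant claims copyright in state regulations.")
opinions = [
    ("Opinion 1", "Copyright in a popular song recording"),
    ("Opinion 2", "Copyright cannot vest in state statutes and regulations"),
    ("Opinion 3", "Liability for an allergy to peanut oil"),
]

ranked = rerank("copyright", complaint, opinions)
print(ranked)
```

Even with the one-word query "copyright," the opinion about state regulations rises to the top because it shares the most vocabulary with the complaint, which is the effect described above.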

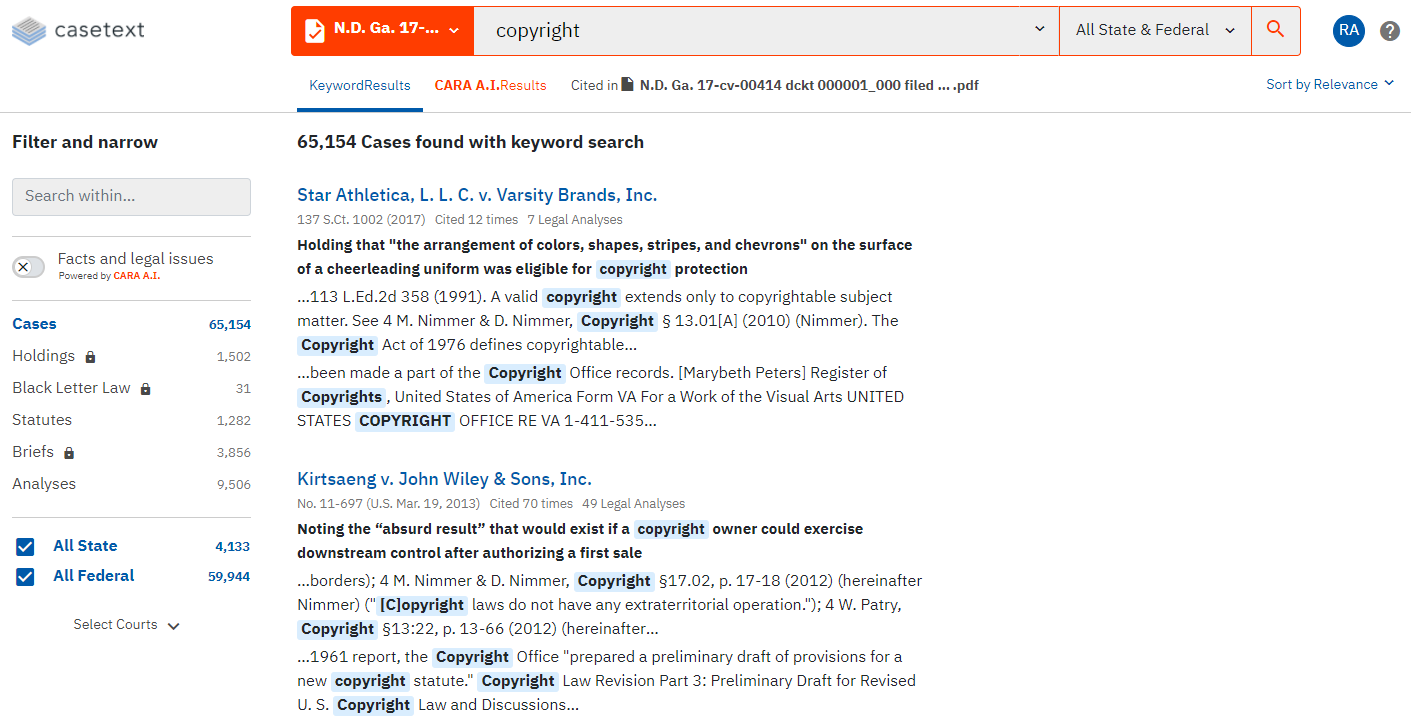

Here is a simple example. Say I am researching whether copyright can vest in state legal materials. If I search just “copyright,” Casetext gives me 65,124 cases. The top results are not helpful, and that would be an awful lot of cases to wade through. I went on to try constructing more complex queries, but still could not put my finger on cases that were on point. My only option would have been to start reading through a long list of results in the hope of hitting on what I wanted.

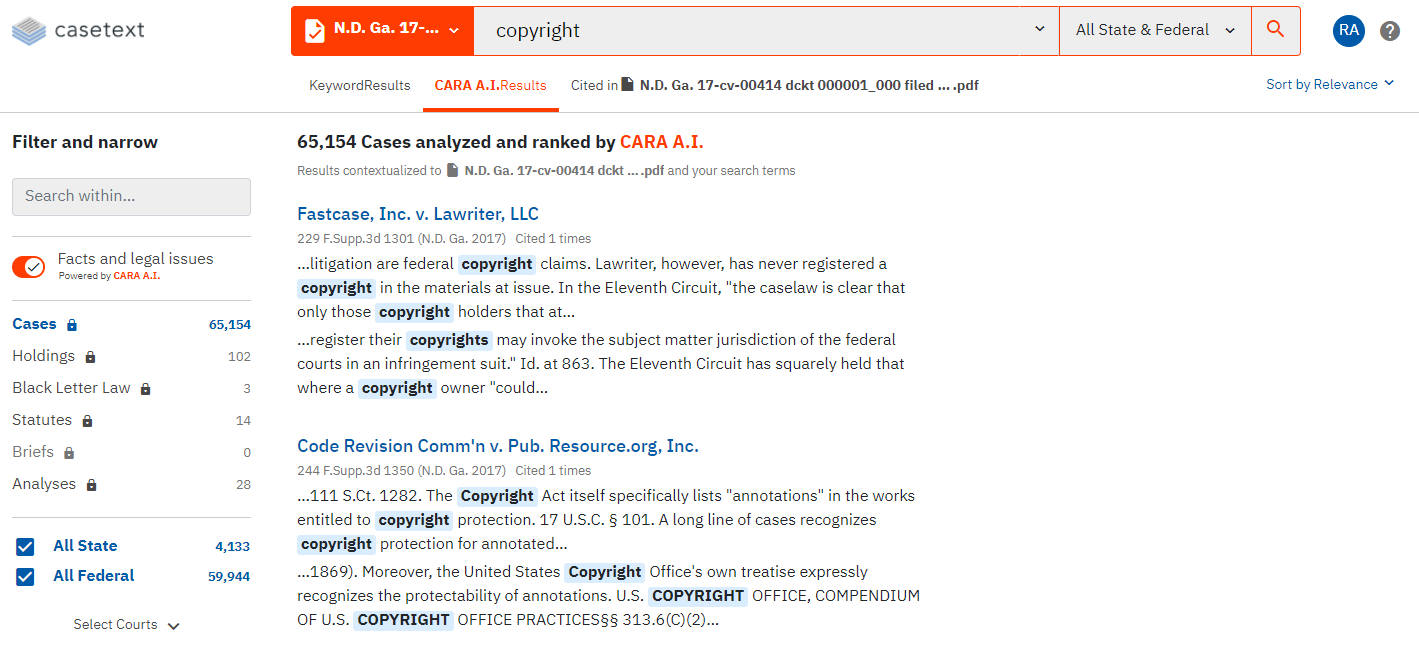

But if I did that same simple query, “copyright,” and also uploaded the complaint filed by Fastcase in its lawsuit against Casemaker over copyright in Georgia administrative materials, then I obtained results that were directly on point. Even though my query remained just the generic term “copyright,” CARA used the complaint to identify the facts and issues I was interested in and deliver on-point results.

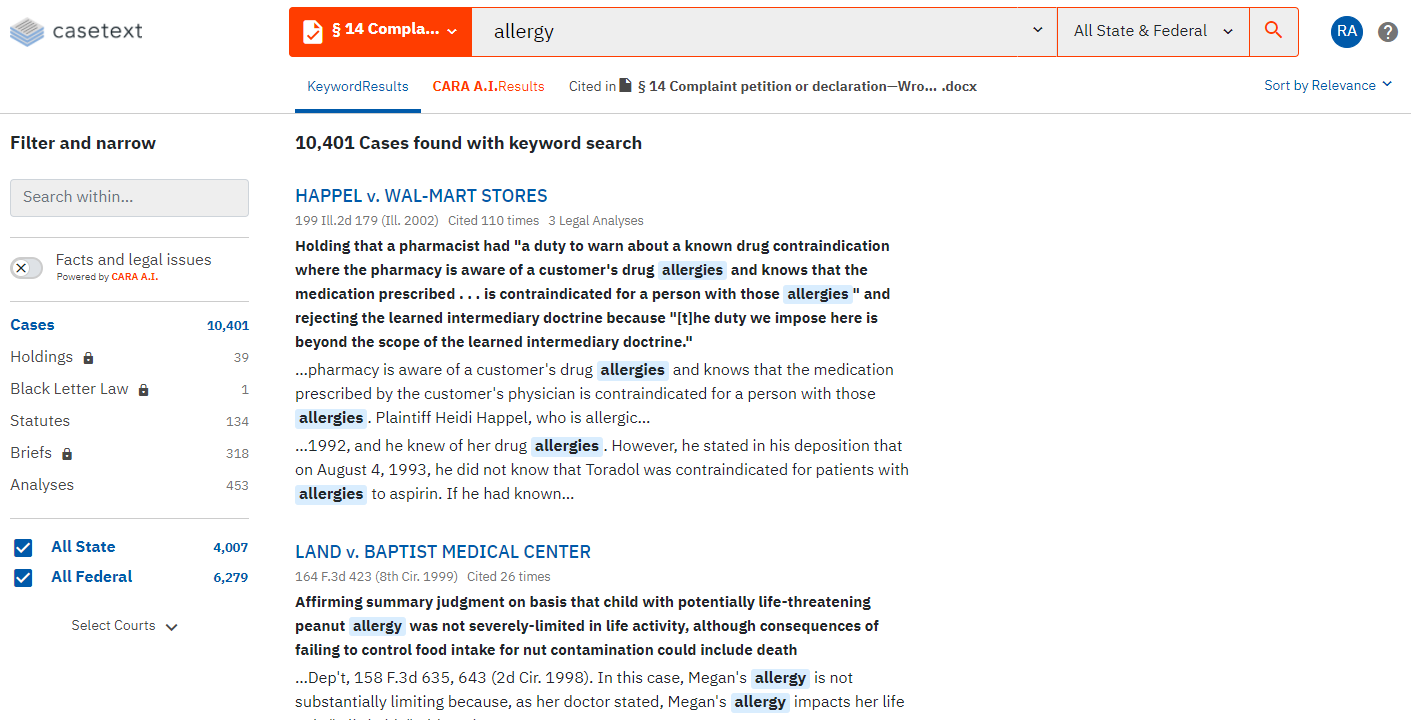

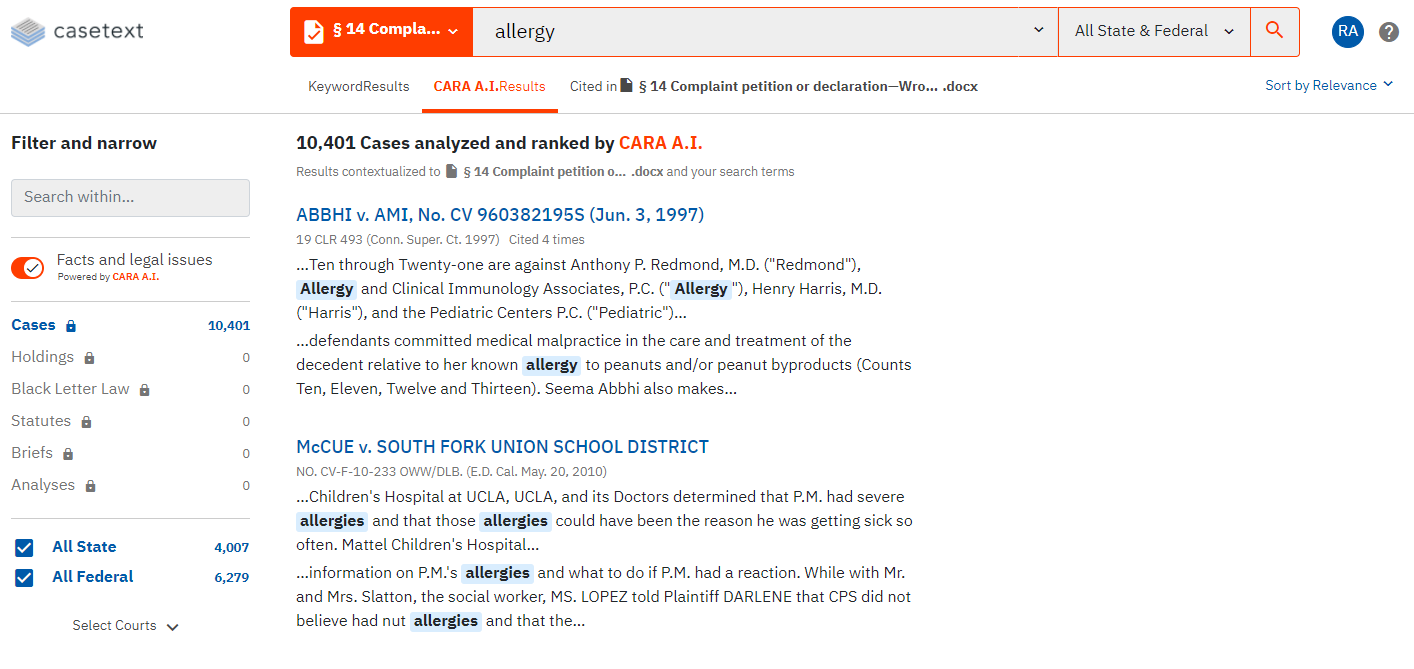

As another example, I searched “allergy” and got 10,401 cases. What I was interested in was liability for death caused by peanut allergy, but Casetext could never have known that based on a generic search for allergy.

If, however, I uploaded a complaint seeking damages for a death caused by exposure to peanut oil, I received cases that were much more directly on point.

It is important to understand that you don’t have to use simplistic queries such as I’ve used here. You can still construct more complex queries. But even then, by combining your query with a legal document, you are likely to get more precise results.

Note also that you can toggle between the CARA results and the keyword results at any time.

Just to push the envelope a bit, I even tried this with a few documents that had no relation to litigation. These experiments produced mixed results. For example, I tried uploading written testimony I had submitted to the state legislature on pending bills, and then tried entering queries related to the subjects of those bills. Twice, the CARA-assisted search performed better than a keyword search. But a third time, it seemed no better than a keyword search. I also tried uploading a memorandum that provided a broad overview of a legal topic and then entered a query relating to a specific application of that topic, with only so-so results.

These experiments with non-litigation documents lead me to think that the CARA AI-assisted research tool works better with litigation documents because they contain more precise discussions of facts and issues.

Those anomalies aside, the CARA AI-assisted research tool strikes me as a major advance in legal research. It addresses a core problem with traditional legal research: the results are typically too broad. Even a skilled researcher capable of constructing complex Boolean searches often finds it difficult to zero in on highly pertinent results. In my initial testing, adding CARA’s AI to a query made for a powerful combination, delivering results that much more closely matched my facts and issues.

Robert Ambrogi Blog
